Programmatically take screen shots of your entire website

Today I had the need to automate the generation of screen shots of an entire website. A project I was working on handed the system over to the client. We wanted to capture the site as it was when we delivered it before the client got into it. The site was about 120 unique pages at the time of delivery. I could have manually taken screen grabs, however, since I like to code, I opted for an approach with a bit more finesse.

ADVANCEMENTS IN .NET CORE

C# 10 & .net core 6 have brought a ton of new features as well as optimized the heck out of so many of the existing classes. I chose to create a Console App using .net core 6. Microsoft has reworked the base Console template so you don't even need any of the namespacing, class or constructors to get it setup. Instead, you can just start typing as if you're in the Main function. I have always been annoyed with how much boiletplate code you needed for a simple console app in the past. Looks like they have finally addressed it and I am happy to have code running within minutes.

In addition to the latest version of .net core, I also used a fantastic nuget package called PuppeteerSharp, which is a system that automates browsers for a variety of programmable uses.

Finish the setup

Finally, to finish setting this project up, all we need is a list of urls in a text file. I used Screaming Frog to spider the site and created my list of URLs, though you could also just grab the urls from the sitemap.xml file. However you do it, put the urls into a text file with one url per line.

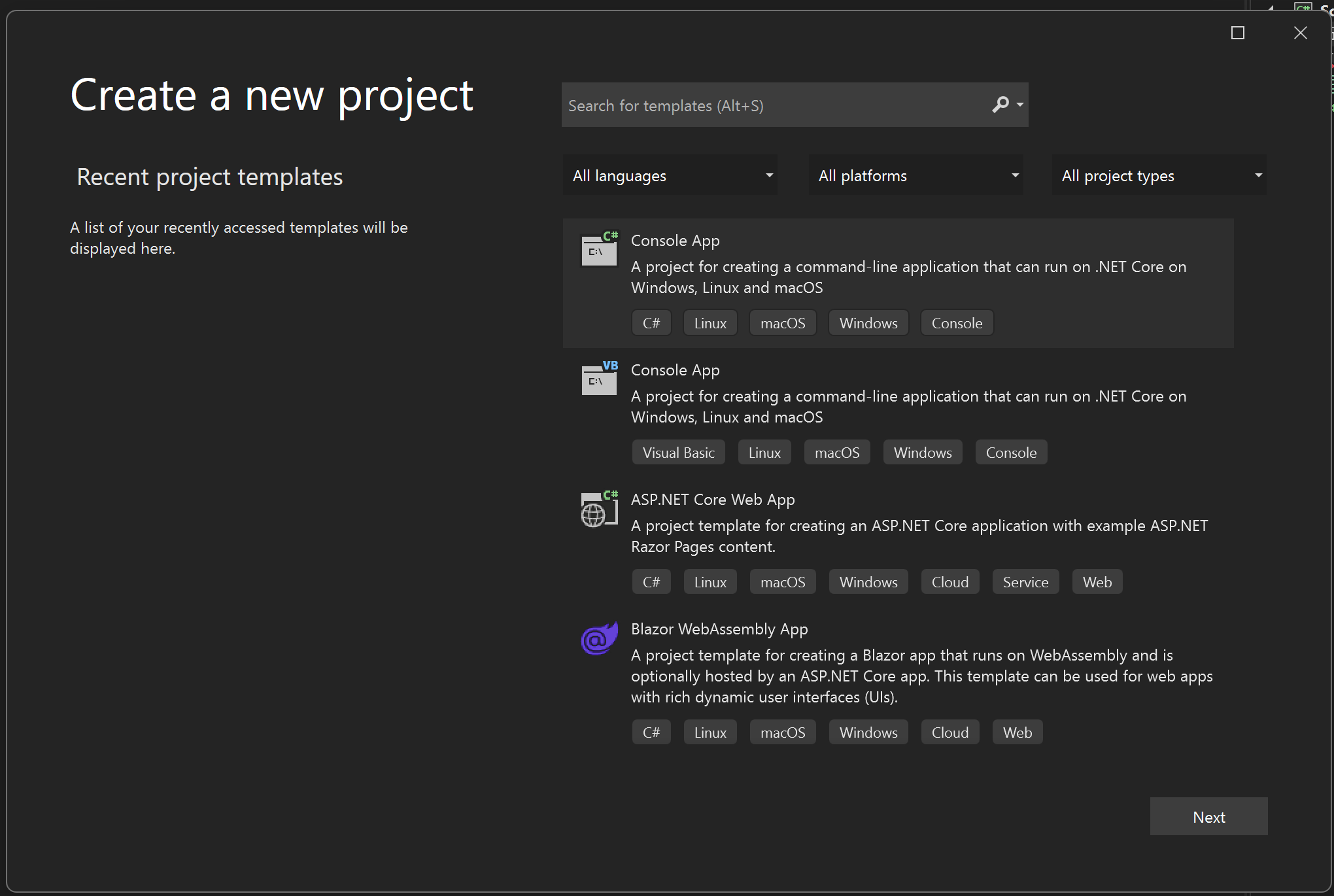

CREATE A CONSOLE APP

Show me the code

Open up the Program.cs file and delete its contents. Then paste in the following code and notice the lack of boilerplate:

using PuppeteerSharp;

using System.IO.Compression;

Console.WriteLine("Fetching...");

// basic variables needed in this program

var domain = "https://mywebsite.com";

var inputFile = "links.txt";

// read in the csv

var lines = File.ReadAllLines(inputFile);

// get the puppeteer browser

using var browserFetcher = new BrowserFetcher();

await browserFetcher.DownloadAsync();

await using var browser = await Puppeteer.LaunchAsync(

new LaunchOptions { Headless = true });

await using var page = await browser.NewPageAsync();

// setup the base ViewPort...change to anything that works for your needs

await page.SetViewportAsync(new ViewPortOptions

{

Width = 1240,

Height = 2000

});

// make sure the output dir exists

if (!Directory.Exists("screenshots"))

Directory.CreateDirectory("screenshots");

if (!Directory.Exists("export"))

Directory.CreateDirectory("export");

// loop through lines

foreach (var line in lines)

{

// remove the domain from the filename

var l = line.Replace(domain, "").Trim();

// remove beginning and ending slashes

if (l == "/")

l = "home";

if (l.EndsWith("/"))

l = l.Substring(0, l.Length - 1);

if (l.StartsWith("/"))

l = l.Substring(1, l.Length - 1);

// create the filename and filepath and make sure the directory exists

var filename = (l.Replace("/", "\") + ".png");

var filepath = Path.Combine("screenshots", filename);

var dir = new FileInfo(filepath).Directory?.FullName;

if (dir == null)

continue;

if(!Directory.Exists(dir))

Directory.CreateDirectory(dir);

// show progress

Console.WriteLine(filepath);

// load up the web page in the headless web browser and capture a screenshot of it

await page.GoToAsync(line);

await page.ScreenshotAsync(filepath, new ScreenshotOptions() { FullPage = true });

}

// zip it all up

var startPath = @".\screenshots";

var zipPath = @".\export\screenshots.zip";

// remove the file if it already exists from a previous run

if(File.Exists(zipPath))

File.Delete(zipPath);

// zip up the entire directory structure

ZipFile.CreateFromDirectory(startPath, zipPath);

Console.WriteLine("Done!");

Copy

That's it! You'll have a nice zipped up representation of your website in png format.